This work is distributed under the Creative Commons Attribution 4.0 License.


Deep-learning-based buffalo identification through muzzle pattern images

Humar Kahramanlı Örnek

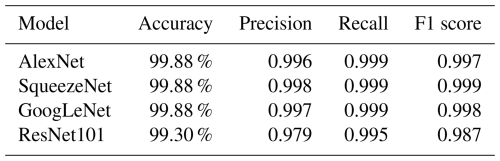

The rapid advancement of artificial intelligence systems has accelerated applications across various fields, including animal biometrics. Accurate identification of buffaloes is crucial for producers and researchers to maintain records and ensure effective tracking. In this study, artificial-intelligence-supported buffalo recognition was developed as an identification method for large livestock. Facial images of 11 buffaloes from a facility in the province of Yozgat were utilised to create a dataset, and four convolutional neural network (CNN) algorithms (AlexNet, SqueezeNet, GoogLeNet, and ResNet101) were applied to it. All four algorithms performed successfully. SqueezeNet performed best, with 99.88 % accuracy, 0.998 precision, 0.999 recall, and an F1 score of 0.999. ResNet101 was the least successful, with 99.30 % accuracy, 0.979 precision, 0.995 recall, and an F1 score of 0.987. SqueezeNet and GoogLeNet both reached 99.88 % accuracy and 0.999 recall. Their precisions were 0.998 and 0.997, and their F1 scores were 0.999 and 0.998, respectively.


Livestock farming is shifting from small-scale farming to intensive and specialised breeding. Labour shortages, difficulties in real-time monitoring, and high management costs pose challenges for large-scale production systems, which therefore increasingly depend on technology (Xu et al., 2021). Using technology in animal husbandry improves efficiency and offers benefits such as health monitoring, feed management, and eco-friendly practices. Through early detection, data analysis, and efficient communication tools, these innovations help producers run sustainable and profitable operations. Identification, verification, and monitoring of farm animals are important for controlling animal movements and preventing disease (Awad, 2016). Marking animals with traditional methods can negatively affect their behaviour and have harmful consequences, which may introduce errors into research data (Mori et al., 2000). Metal ear tags, flexible plastic ear tags (Wardrope, 1995), and, more recently, subcutaneous RFID (radio frequency identification) tags (Voulodimos et al., 2010) and rumen boluses are used extensively for animal identification. Ear tags are difficult to read and may fall off or be replaced. Applying subcutaneously injected RFID tags or rumen boluses requires expertise, and serious accidents during application may injure or even kill the animal (Bugge et al., 2011; Lu et al., 2014). New approaches are therefore being investigated to overcome the problems of traditional identification methods, and biometric identification methods have begun to be used alongside existing ones.

With the spread of artificial intelligence, deep learning, artificial neural networks, and automation, applying these technologies to traditional animal husbandry has gained importance in recent years (Saleem et al., 2021). Adopting them is expected to significantly reduce human labour, increase efficiency in modern production, and improve product quality (Neethirajan and Kemp, 2021). Visual animal biometrics is a research field combining computer vision, pattern recognition, and cognitive science, and it also plays a role in the analysis of animal behaviour (Kühl and Burghardt, 2013). Various biometric features are used to define the unique identities of animals (Dandıl et al., 2019). Muzzle print patterns, iris patterns, retinal vascular patterns, facial images, and DNA profiles are unique to each animal. These characteristics make it possible to distinguish animals through a process called biometric identification (Jiménez-Gamero et al., 2006; Rojas-Olivares et al., 2012). By automating authentication and identification, biometric methods provide high security while maintaining accuracy and reliability (Awad, 2016).

In animals, muzzle prints are biometric identifiers that are unique to each individual, like fingerprints in humans (Minagawa et al., 2002). Distinguishing animals by their muzzle prints has been studied for many years (Baranov et al., 1993). Muzzle prints can be recorded by ink printing or digital imaging. With ink printing, moisture on the animal's muzzle and the difficulty of keeping the animal still lead to smeared and unreadable prints, which makes the method problematic and time-consuming (Barry et al., 2007). Digital images give easier and faster results. Minagawa et al. (2002) studied this method and used combination pixels on depth traces for muzzle print matching. They identified 30 animals with an overall accuracy of 66.6 %. However, the results were unsatisfactory because of low-quality trace images, a limited database size, and the limited performance of the feature extraction and matching algorithms. In a similar study, Noviyanto and Arymurthy (2012) applied the speeded-up robust features (SURF) approach to cattle muzzle print images. Using 120 muzzle print images from eight animals, the U-SURF variant reached a maximum identification accuracy of 90 % with 10 training images and 5 test images per animal. Awad et al. (2013) achieved 93.3 % identification accuracy on 105 images of 15 cattle from a previously collected database. Noviyanto and Arymurthy (2013) achieved 90.6 % accuracy in identifying cattle by applying a matching refinement technique in the scale-invariant feature transform (SIFT) method to a dataset of 80 images. Gaber et al. (2016) used an AdaBoost classifier on a database of 31 cattle and achieved 99.5 % classification accuracy. El-Henawy et al. (2016) applied the J48 decision tree and naïve Bayes methods to classify animals and reported that the decision tree classifier was more accurate than the naïve Bayes classifier. Andrew et al. (2019) used YOLOv2, which is based on a deep convolutional network, together with CNNs (convolutional neural networks) to classify images from videos with 94.4 % accuracy.

Bello et al. (2020) obtained 98.99 % accuracy on 4000 muzzle images by combining pre-processing with Gaussian filtering and a stacked deep learning technique. Shojaeipour et al. (2021) achieved 99.11 % identification accuracy by applying a two-stage YOLOv3–ResNet50 algorithm to images of cattle muzzles.

Water buffaloes are disease-resistant, hardy animals that can adapt to various environmental conditions. People have used their meat, milk, and leather for centuries (Şahin et al., 2013). In terms of milk production, water buffalo are the second most important species worldwide after dairy cattle, and they produce high-quality milk (Ermetin, 2017). Research on water buffalo breeding and on adapting precision livestock farming (PLF) technologies to the species is limited (Kul et al., 2018; Ermetin, 2021). Because water buffaloes can be aggressive, it is difficult to approach them, read their ear tags, or handle them. Intervention by an unfamiliar person, in particular, may cause injuries (Ermetin, 2023). Existing ear tags often cannot be read or fall off, so identifying buffaloes with artificial intelligence is an important way to overcome these problems. Muzzle recognition makes it easier to identify animals without approaching them, so their identity can be established quickly and necessary interventions can be made.

Van Steenkiste et al. (2023) developed a deep-learning model to detect clots indicative of clinical mastitis in pictures of the milk filter socks of the milking system. Of the 1282 pictures taken, 696 showed clots and 586 did not. CNNs with residual connections were trained, with hyperparameters optimised using a genetic algorithm, and 100 % classification accuracy was achieved. El Hadad et al. (2015) used two models, artificial neural networks (ANNs) and a k-nearest-neighbour (kNN) classifier, to classify bovines from images of 28 animals. The ANNs achieved 92.76 % classification accuracy and kNN achieved 100 %. Kumar et al. (2018) proposed a deep-learning-based approach to identifying individual cattle from their muzzle pattern images, addressing the problem of missed or swapped animals and false insurance claims. Using convolutional neural networks, they achieved 98.99 % identification accuracy.

Madkour and Abdelsabour-Khalaf (2022) presented a method for identifying animal species by examining the nasal skin of seven animal species with scanning electron microscopy (SEM). They concluded that the skin around the nostrils can serve as a means of identification in forensic investigations and can contribute to veterinary forensic medicine in general.

This research aimed to recognise water buffaloes from images of their muzzle patterns using artificial intelligence techniques. Precision farming techniques are rarely applied in water buffalo breeding because of the animals' temperament and the breeding methods used. A further aim was to contribute to the literature on this method.

Deep learning, a subfield of artificial intelligence, has made significant strides in solving problems that resisted the best efforts of the artificial intelligence community for many years. It has proven very good at detecting complex structures in high-dimensional data and is therefore applicable to many areas of science, business, and government (LeCun et al., 2015). Convolutional neural networks (CNNs), a class of deep neural networks (DNNs), are designed to process data in the form of multiple arrays, such as a colour image consisting of three 2D arrays that hold the pixel intensities of the three colour channels (LeCun et al., 2015). CNNs were modelled on observations of biological processes and mimic the functions of different layers of the human brain (Shanthi and Sabeenian, 2019). Because they learn features by themselves and perform well on multi-class problems, CNN models can achieve high accuracy in image classification. A CNN consists of a chain of convolutional layers, pooling layers, and batch normalisation operations.
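To make the convolution and pooling operations more concrete, here is a minimal NumPy sketch. It shows a single-channel convolution, implemented as cross-correlation as in most CNN libraries, followed by a ReLU activation and 2 × 2 max pooling. The toy image, the hand-set kernel, and the function names are hypothetical and chosen only for illustration. Real CNN layers work on many channels, learn their kernels during training, and use far more efficient implementations.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray, stride: int = 1) -> np.ndarray:
    """Single-channel 2D cross-correlation without padding (what CNN layers call convolution)."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(patch * kernel)  # weighted sum over the receptive field
    return out


def max_pool2d(x: np.ndarray, size: int = 2, stride: int = 2) -> np.ndarray:
    """Keep the largest value in each size x size window."""
    out_h = (x.shape[0] - size) // stride + 1
    out_w = (x.shape[1] - size) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = x[i * stride:i * stride + size, j * stride:j * stride + size].max()
    return out


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


# Toy 6 x 6 grey-level image: dark left half, bright right half (a vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hypothetical hand-set 3 x 3 kernel that responds to dark-to-bright vertical edges.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

feature_map = relu(conv2d_valid(image, kernel))  # 4 x 4 map, strongest along the edge
pooled = max_pool2d(feature_map)                 # 2 x 2 summary of the feature map
print(feature_map)
print(pooled)
```

Pooling halves each spatial dimension but keeps the strong edge response. This is how stacked convolution and pooling layers progressively summarise an image.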

There are several well-known architectures of CNNs:

- LeNet is one of the earliest CNN architectures, proposed by LeCun et al. (1998).
- AlexNet was proposed in 2012 (Krizhevsky et al., 2017) and became widely known after winning the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2012.
- ZF Net, an improvement on AlexNet presented by Zeiler and Fergus (2014), won ILSVRC 2013.
- GoogLeNet (or Inception V1) was proposed by researchers at Google in 2014 (Szegedy et al., 2015) and won ILSVRC 2014.
- VGGNet was proposed by Simonyan and Zisserman (2014) and was one of the most popular models submitted to ILSVRC 2014.
- Residual Neural Network (ResNet) was developed by He et al. (2016) and won ILSVRC 2015.

This research employs four CNN-based algorithms, namely AlexNet, SqueezeNet, GoogLeNet, and ResNet101.

2.1 Material

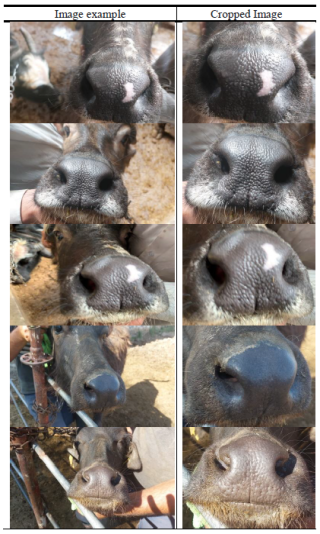

In this study, images were captured of 11 buffaloes on a water buffalo farm in the central district of the province of Yozgat. The dataset was compiled by recording facial photographs and other images of the water buffaloes with a camera at various time intervals. Images were collected from 40 healthy buffaloes of different ages, and the age distribution was taken into account during the study. The images were recorded from a suitable distance, with each buffalo's face visible from different angles (Fig. 1). The dataset contains a total of 4263 RGB colour images of 11 buffaloes, which were numbered 1–11.

There are many CNN architectures, such as LeNet, AlexNet, GoogLeNet, VGGNet, and ResNet. This study employs four models: AlexNet, SqueezeNet, GoogLeNet, and ResNet101. These models are introduced in the following subsections.

2.2 AlexNet

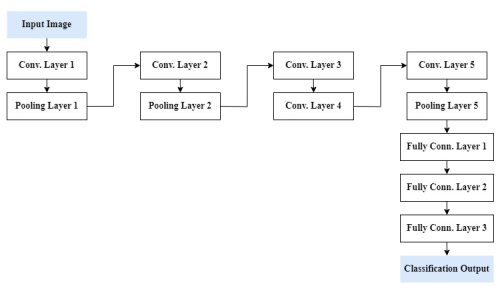

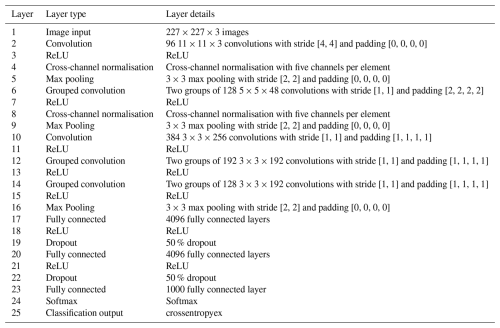

AlexNet, a deep CNN architecture, was proposed by Krizhevsky et al. (2017). Its high classification accuracy on the ImageNet dataset was a significant breakthrough in machine learning, and researchers have since devoted much more time and effort to deep learning models (Lu et al., 2021). AlexNet consists of five convolutional layers and three fully connected layers, with max pooling after the first, second, and fifth convolutional layers. The input is a 227 × 227 × 3 array: the image is 227 pixels wide and 227 pixels high and has three channels in RGB mode (Chen et al., 2021). The structure of AlexNet is shown in Fig. 2, and the detailed architecture is given in Table 1.
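The 227 × 227 × 3 input can be traced through the convolutional part of the network using the standard output-size formula floor((n + 2p − k) / s) + 1. Here n is the input size, k the kernel size, p the padding, and s the stride. The sketch below assumes the layer settings of the original AlexNet design:

- 11 × 11 kernels with stride 4 in the first convolution
- 3 × 3 max pooling with stride 2
- padding of 2 and 1 in the later convolutions

These settings are not listed in this section. Local response normalisation is omitted because it does not change the shape, and the helper names are hypothetical.

```python
def conv_out(size: int, kernel: int, stride: int = 1, pad: int = 0) -> int:
    """Spatial output size of a convolution or pooling layer: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * pad - kernel) // stride + 1


# (name, kernel, stride, padding, output channels; None means a pooling layer keeps the channels)
ALEXNET_FEATURES = [
    ("conv1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, None),
    ("conv2", 5, 1, 2, 256),
    ("pool2", 3, 2, 0, None),
    ("conv3", 3, 1, 1, 384),
    ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256),
    ("pool5", 3, 2, 0, None),
]


def trace_shapes(input_size: int = 227, input_channels: int = 3):
    """Return (layer name, height, width, channels) after each layer."""
    size, channels = input_size, input_channels
    shapes = []
    for name, k, s, p, out_ch in ALEXNET_FEATURES:
        size = conv_out(size, k, s, p)
        if out_ch is not None:
            channels = out_ch
        shapes.append((name, size, size, channels))
    return shapes


for name, h, w, c in trace_shapes():
    print(f"{name}: {h} x {w} x {c}")
```

Under these assumptions, the last pooling layer produces a 6 × 6 × 256 feature map (9216 values). This is the vector the first fully connected layer receives.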

2.3 SqueezeNet

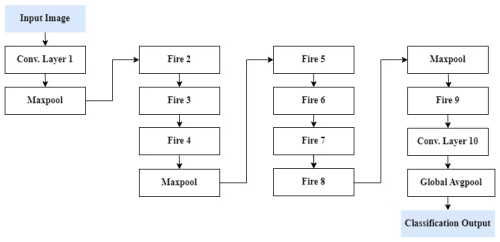

SqueezeNet is a CNN designed for efficiency: it reaches AlexNet-level accuracy with 50 times fewer parameters. It consists of 15 layers in total: three max-pooling layers, two convolutional layers, eight fire layers, one global average pooling layer, and one softmax output layer (Minu et al., 2022). The layered framework of the network is shown in Fig. 3.
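Much of SqueezeNet's parameter saving comes from its fire layers. In the original SqueezeNet design, a fire module first "squeezes" the input with 1 × 1 convolutions. It then "expands" it with parallel 1 × 1 and 3 × 3 convolutions whose outputs are concatenated.

The sketch below counts weights and biases for one fire module and for a plain 3 × 3 convolution with the same input and output channels. The channel sizes (96 in, 16 squeeze, 64 + 64 expand) are illustrative values, not settings reported in this study. The helper functions are hypothetical.

```python
def conv_params(in_ch: int, out_ch: int, k: int) -> int:
    """Weights plus biases of a k x k convolution from in_ch to out_ch channels."""
    return k * k * in_ch * out_ch + out_ch


def fire_params(in_ch: int, squeeze: int, expand1: int, expand3: int) -> int:
    """Fire module: 1x1 squeeze layer, then parallel 1x1 and 3x3 expand layers (outputs concatenated)."""
    return (conv_params(in_ch, squeeze, 1)        # squeeze: shrink the channel count
            + conv_params(squeeze, expand1, 1)    # expand with cheap 1x1 filters
            + conv_params(squeeze, expand3, 3))   # expand with 3x3 filters on few channels


in_ch, s, e1, e3 = 96, 16, 64, 64          # illustrative channel sizes
fire = fire_params(in_ch, s, e1, e3)        # output: e1 + e3 = 128 channels
plain = conv_params(in_ch, e1 + e3, 3)      # plain 3x3 conv producing the same 128 channels
print(fire, plain, round(plain / fire, 1))
```

With these values, the fire module needs 11 920 parameters, compared with 110 720 for the plain convolution, roughly nine times fewer. The saving comes from applying the 3 × 3 filters to only 16 squeezed channels.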

2.4 GoogLeNet

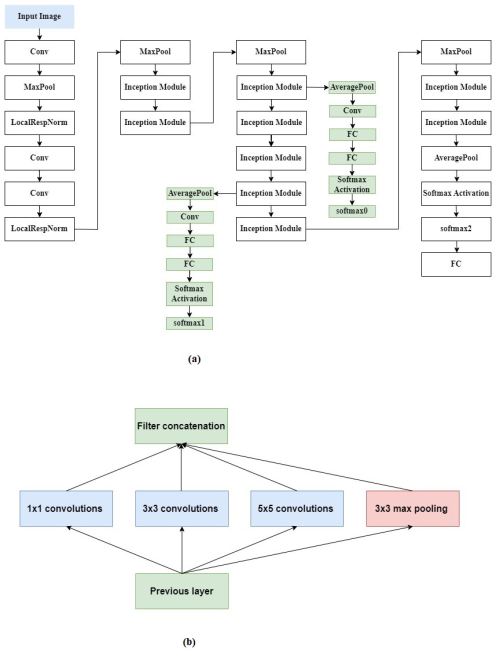

GoogLeNet (Szegedy et al., 2015), also known as Inception, is a popular 22-layer deep CNN architecture developed to outperform existing CNN architectures. It is notable for its Inception modules (IMs), which combine filters at different scales to improve the model's ability to extract information. The model also reduces the number of parameters and increases computational efficiency, for example by using 1 × 1 convolutions to reduce the number of channels before the larger convolutions. An IM applies several convolutions with different kernel sizes and a max-pooling operation in parallel, which helps the network learn more meaningful features (Balagourouchetty et al., 2019). The structure of GoogLeNet and the Inception module is shown in Fig. 4.
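The effect of the 1 × 1 reduction layers in an Inception module can be illustrated by counting convolution weights with and without them. The channel sizes below are assumed to follow the "inception (3a)" module of the original GoogLeNet paper:

- 192 input channels
- a 64-channel 1 × 1 branch
- a 96 → 128 3 × 3 branch
- a 16 → 32 5 × 5 branch
- a 32-channel pooling projection

Biases are ignored. The function is a hypothetical helper, not part of any library.

```python
def conv_weights(in_ch: int, out_ch: int, k: int) -> int:
    """Weights of a k x k convolution (biases ignored for simplicity)."""
    return k * k * in_ch * out_ch


def inception_module(in_ch, n1x1, n3x3_red, n3x3, n5x5_red, n5x5, pool_proj, reduce=True):
    """Return (output channels, weight count) of an Inception module with four parallel branches."""
    # 1x1 branch and the 1x1 projection after max pooling are the same in both variants.
    weights = conv_weights(in_ch, n1x1, 1) + conv_weights(in_ch, pool_proj, 1)
    if reduce:
        # 1x1 "reduce" convolutions shrink the channels before the expensive 3x3 and 5x5 filters.
        weights += conv_weights(in_ch, n3x3_red, 1) + conv_weights(n3x3_red, n3x3, 3)
        weights += conv_weights(in_ch, n5x5_red, 1) + conv_weights(n5x5_red, n5x5, 5)
    else:
        weights += conv_weights(in_ch, n3x3, 3) + conv_weights(in_ch, n5x5, 5)
    out_ch = n1x1 + n3x3 + n5x5 + pool_proj  # branch outputs are concatenated along channels
    return out_ch, weights


config = dict(in_ch=192, n1x1=64, n3x3_red=96, n3x3=128, n5x5_red=16, n5x5=32, pool_proj=32)
print(inception_module(**config))
print(inception_module(**config, reduce=False))
```

Both variants output 256 channels. The version with reduction layers needs 163 328 weights, compared with 393 216 without them.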

2.5 ResNet

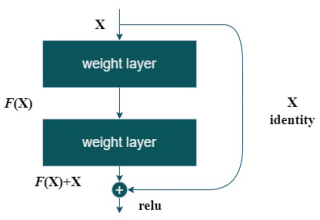

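He et al. (2016) introduced the residual block. It contains two convolutional layers and a parameter-free shortcut connection that adds the block's input, unmodified, to the output of the convolutional layers. The layers therefore learn a residual mapping F(x), and the block outputs F(x) + x. Deeper variants such as ResNet101 use a three-layer bottleneck block (1 × 1, 3 × 3, and 1 × 1 convolutions) instead. The residual block is shown in Fig. 5.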
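Below is a minimal NumPy sketch of the residual idea. It assumes dense (fully connected) layers in place of the convolution and batch normalisation layers of a real ResNet. The block computes a residual F(x) and adds the unmodified input back through the shortcut. The feature size and random weights are hypothetical placeholders, not trained values.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def residual_block(x, w1, b1, w2, b2):
    """Return relu(F(x) + x), where F(x) = w2 @ relu(w1 @ x + b1) + b2 is the residual branch.

    Dense layers stand in for the convolution and batch-normalisation layers of a real ResNet.
    """
    residual = w2 @ relu(w1 @ x + b1) + b2  # F(x): what the two layers learn
    return relu(residual + x)               # shortcut adds the unmodified input back


rng = np.random.default_rng(seed=0)
d = 8                                      # hypothetical feature size
x = rng.standard_normal(d)
w1 = 0.1 * rng.standard_normal((d, d))     # placeholder weights, not trained values
w2 = 0.1 * rng.standard_normal((d, d))
b1 = np.zeros(d)
b2 = np.zeros(d)

y = residual_block(x, w1, b1, w2, b2)

# With an all-zero residual branch, the block reduces to relu(x): the identity is easy to represent.
y_identity = residual_block(x, np.zeros((d, d)), b1, np.zeros((d, d)), b2)
print(y.shape, np.allclose(y_identity, relu(x)))
```

When the residual branch outputs zero, the block simply passes its input through (apart from the final ReLU). This illustrates why stacking such blocks makes very deep networks easier to optimise (He et al., 2016).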

2.6 Performance metrics

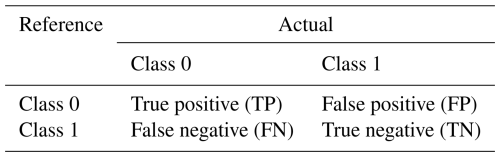

In this study, a confusion matrix and evaluation metrics were used to measure classification performance. The confusion matrix for a two-class problem, defined in terms of actual and predicted values, is given in Table 2.

Each column of the confusion matrix represents the instances in a predicted class, while each row represents the instances in an actual class (or vice versa). In binary classification, a true positive (TP) is an outcome where the model correctly predicts the positive class, and a true negative (TN) is an outcome where it correctly predicts the negative class. A false positive (FP) is an outcome where the model incorrectly predicts the positive class, and a false negative (FN) is an outcome where it incorrectly predicts the negative class. TP, TN, FP, and FN are used together to calculate evaluation metrics such as accuracy, sensitivity (recall), precision, and F1 score.

The key performance metrics used to evaluate classification performance are as follows:

- Accuracy. Accuracy measures a model's performance on classification problems as the proportion of correctly classified samples among all samples. The formula for accuracy is shown in Eq. (1):

  $$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

- Precision. Precision is the ratio of samples correctly classified as positive (TP) to all samples that the model classified as positive (TP + FP). The formula for precision is shown in Eq. (2):

  $$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{2}$$

- Recall. Recall is the ratio of samples correctly classified as positive (TP) to all samples that are actually positive (TP + FN). The formula for recall is shown in Eq. (3):

  $$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{3}$$

- F1 score. The F1 score is the harmonic mean of precision and recall. The formula for F1 score is shown in Eq. (4):

  $$F_1 = 2 \times \frac{\mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{4}$$
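This study is an 11-class problem, so TP, FP, and FN must be counted for each class. The averaging method behind the reported values is not stated, so a common convention is assumed here:

- treat each class in turn as positive (one-vs-rest)
- compute precision, recall, and F1 for that class
- report the unweighted (macro) average over classes

Accuracy is the number of correctly classified samples (the diagonal of the confusion matrix) divided by the total. The function name is hypothetical, rows are actual classes and columns are predicted classes, and the 3-class matrix is a toy example, not data from this study.

```python
def classification_metrics(cm):
    """cm[i][j] = number of samples of actual class i predicted as class j.

    Returns accuracy and macro-averaged (one-vs-rest) precision, recall, and F1 score.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        fn = sum(cm[k]) - tp                       # actually k, predicted another class
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


cm = [[5, 0, 0],
      [0, 4, 1],
      [0, 0, 5]]
print(classification_metrics(cm))
```

Here, macro F1 is the mean of the per-class F1 scores. Another common variant takes the harmonic mean of the macro precision and macro recall, and the two can differ slightly.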

In the first stage of the application, the images were pre-processed by cropping unwanted areas with ACDSee Pro software. Table 3 presents sample original and cropped images of some buffaloes in the dataset.

The dataset was randomly divided into training and testing sets. The training set contains 80 % of the samples and the testing set the remaining 20 %, giving 3411 training and 852 testing samples. The models were implemented in MATLAB R2023b on a computer with an Intel Core i7-7700HQ CPU, 16 GB of RAM, and an Nvidia GeForce GTX 1070 GPU with 8 GB of memory.
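The paper states only that the split was random with an 80/20 ratio. The sketch below shows one way to do this. It assumes the split is made separately for each buffalo (class), so that every animal appears in both sets. The function name, seed, and round-half-up rule are assumptions, and the sketch does not reproduce the MATLAB code used in the study. Because rounding happens per class, the totals need not equal exactly 0.8 × 4263 = 3410.4.

```python
import math
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.8, seed=0):
    """Split sample indices per class so each class keeps about train_fraction of its samples for training."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label in sorted(by_class):
        idxs = by_class[label][:]
        rng.shuffle(idxs)
        n_train = math.floor(train_fraction * len(idxs) + 0.5)  # round half up within each class
        train.extend(idxs[:n_train])
        test.extend(idxs[n_train:])
    return train, test


# Toy labels: 10 images of buffalo 0 and 7 images of buffalo 1.
labels = [0] * 10 + [1] * 7
train, test = stratified_split(labels)
print(len(train), len(test))
```

In this toy case, 8 of the 10 and 6 of the 7 images go to training, giving 14 training and 3 test images.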

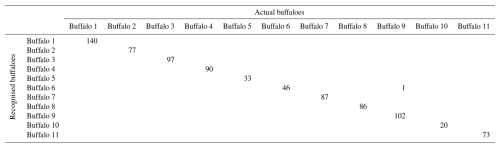

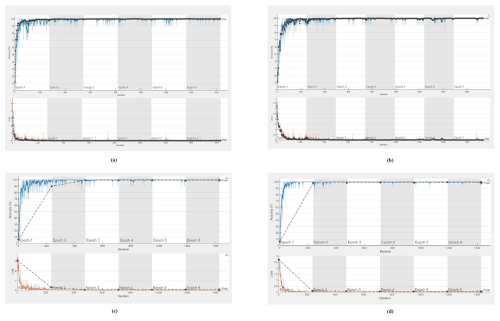

In this study, four CNN models were trained to recognise buffaloes from muzzle images. The first model was AlexNet. For training, the samples were randomly split into training and validation sets. MiniBatchSize was set to 10 and the learning rate to 0.0001, and stochastic gradient descent with momentum (SGDM) was used as the optimiser. AlexNet correctly recognised 851 of the 852 test samples. The confusion matrix obtained from AlexNet is shown in Table 4, and the accuracy and loss graphs are shown in Fig. 6a.
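All four models were trained with SGDM. The update keeps a velocity term v that accumulates past gradients: v ← γv − α∇f(w), then w ← w + v. Here α is the learning rate and γ the momentum. The sketch below applies this rule to a toy one-dimensional quadratic using the full gradient. In actual training, the gradient would instead be estimated from each mini-batch of 10 or 11 images.

The momentum of 0.9 is an assumption (a common choice), since the paper does not report it. The learning rate of 0.1 is chosen only so the toy example converges quickly; the study used 0.0001–0.0003. The function name is hypothetical.

```python
def sgdm(grad, w0: float, learning_rate: float, momentum: float = 0.9, steps: int = 100) -> float:
    """Gradient descent with momentum: v <- momentum * v - learning_rate * grad(w); w <- w + v."""
    w, v = w0, 0.0
    for _ in range(steps):
        v = momentum * v - learning_rate * grad(w)  # velocity accumulates past gradients
        w = w + v                                   # step along the velocity
    return w


# Toy objective f(w) = (w - 3)^2 with gradient 2(w - 3); its minimum is at w = 3.
w_final = sgdm(lambda w: 2.0 * (w - 3.0), w0=0.0, learning_rate=0.1, steps=200)
print(round(w_final, 4))
```

The momentum term smooths the updates across steps. With noisy mini-batch gradients, this damps oscillations compared with plain stochastic gradient descent.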

The second model was SqueezeNet. For training, the samples were randomly split into training and validation sets. MiniBatchSize was set to 11 and the learning rate to 0.0002, with SGDM as the optimiser. SqueezeNet correctly recognised 851 of the 852 test samples. The confusion matrix obtained from SqueezeNet is shown in Table 5, and the accuracy and loss graphs are shown in Fig. 6b.

The third model was GoogLeNet. For training, the samples were randomly split into training and validation sets. MiniBatchSize was set to 10 and the learning rate to 0.0003, with SGDM as the optimiser. GoogLeNet correctly recognised 851 of the 852 test samples. The confusion matrix obtained from GoogLeNet is shown in Table 6, and the accuracy and loss graphs are shown in Fig. 6c.

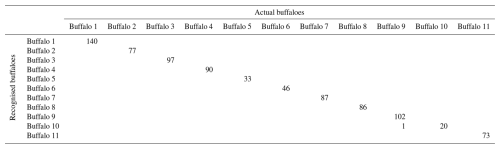

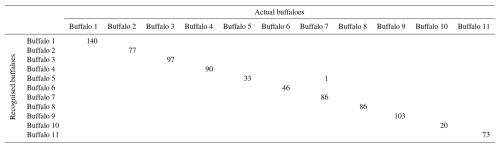

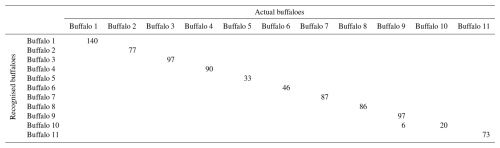

The fourth model was ResNet101. For training, the samples were randomly split into training and validation sets. MiniBatchSize was set to 10 and the learning rate to 0.0003, with SGDM as the optimiser. ResNet101 correctly recognised 846 of the 852 test samples. The confusion matrix obtained from ResNet101 is shown in Table 7, and the accuracy and loss graphs are shown in Fig. 6d.

Figure 6. Accuracy and loss graphs of the classification process of the (a) AlexNet, (b) SqueezeNet, (c) GoogLeNet, and (d) ResNet101 models.

The classification accuracy, precision, recall, and F1 score obtained with all four models are given in detail in Table 8.

As seen from Tables 4–8 and Fig. 6, all four models successfully recognised buffaloes from their muzzle patterns. AlexNet, SqueezeNet, and GoogLeNet correctly identified 851 of the 852 test images, while ResNet101 performed slightly worse, misidentifying six images.
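Accuracy is the share of correctly classified test images, so the reported accuracies follow directly from these counts on the 852-image test set:

$$\mathrm{Accuracy}_{\text{AlexNet, SqueezeNet, GoogLeNet}} = \frac{851}{852} \approx 0.9988 = 99.88\,\%, \qquad \mathrm{Accuracy}_{\text{ResNet101}} = \frac{846}{852} \approx 0.9930 = 99.30\,\%$$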

This study investigated whether buffaloes can be recognised from their muzzle patterns. The original dataset created within the scope of the study has been added to the literature. The recognition task covered 11 buffaloes. Four CNN algorithms were employed, and their performance was evaluated. AlexNet, SqueezeNet, and GoogLeNet achieved 99.88 % classification accuracy, and SqueezeNet achieved the best F1 score. ResNet101 gave the weakest result among the investigated algorithms, with 99.30 % accuracy, which is still a sufficiently successful result for this field. To our knowledge, only one previous study has addressed buffalo identification based on muzzle prints. Singh et al. (2025) used muzzle images of 198 Surti buffaloes, applied four deep learning algorithms, and achieved 90.8 % accuracy with AlexNet. There are, however, several studies on cattle identification based on muzzle prints. Among those compared here, the lowest accuracy was reported by El-Henawy et al. (2016): 89.64 % for 52 bovines. Shojaeipour et al. (2021) used the largest number of cattle and achieved 99.11 % accuracy with YOLOv3–ResNet50. El Hadad et al. (2015) achieved 100 % accuracy with the kNN algorithm on 28 bovines. The other studies achieved approximately 98 % accuracy (Barry et al., 2007; Gaber et al., 2016; Kumar et al., 2018). Compared with this literature, the present study achieved successful results. The study's major contributions are the creation of a unique buffalo muzzle image dataset, the application of CNN models to livestock muzzle pattern recognition, and a comprehensive evaluation of these models. These findings demonstrate the potential of CNN-based recognition systems in the livestock sector.

This research has practical implications for livestock management. Such recognition systems can offer significant advantages, including enhancing livestock tracking, reducing human error, and streamlining herd management processes. For farms, the adoption of these systems may provide economic benefits by optimising resource allocation and improving operational efficiency. Additionally, the potential development of a mobile application for this purpose could further enhance accessibility and usability in real-world scenarios. Future studies may focus on the economic feasibility of implementing these technologies at a larger scale, along with investigating their broader contributions to improving productivity and sustainability in livestock farming.

The corresponding author can provide all raw data upon request.

OE: conceptualisation, design, review, field implementation and data collection, and writing and preparation of the paper. HKÖ: methodology; conceptualisation; data collection; statistical analysis; writing, review, and editing of the paper; supervision; data analysis and interpretation; and editing of the study. All of the authors read and approved this final version for publication.

The contact author has declared that neither of the authors has any competing interests.

This study was conducted following the principles outlined in the Declaration of Helsinki and in accordance with animal welfare guidelines. Images were obtained from a distance using high-resolution cameras, without disturbing the animals' natural behaviour.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

This paper was edited by Antke-Elsabe Freifrau von Tiele-Winckler and reviewed by four anonymous referees.

Andrew, W., Greatwood, C., and Burghardt, T.: Aerial animal biometrics: Individual friesian cattle recovery and visual identification via an autonomous uav with onboard deep inference, in: 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), November 2019, 237–243, IEEE, 2019.

Awad, A. I.: From classical methods to animal biometrics: A review on cattle identification and tracking, Comput. Electron. Agr., 123, 423–435, https://doi.org/10.1016/j.compag.2016.03.014, 2016.

Awad, A. I., Hassanien, A. E., and Zawbaa, H. M. A.: Cattle identification approach using live captured muzzle print images, in: International Conference on Security of Information and Communication Networks 2013, September, 143–152, Berlin, Heidelberg, Springer, https://doi.org/10.1007/978-3-642-40597-6, 2013.

Balagourouchetty, L., Pragatheeswaran, J. K., Pottakkat, B., and Ramkumar, G.: GoogLeNet-based ensemble FCNet classifier for focal liver lesion diagnosis, IEEE J. Biomed. Health, 24, 1686–1694, https://doi.org/10.1109/JBHI.2019.2942774, 2019.

Baranov, A. S., Graml, R., Pirchner, F., and Schmid, D. O.: Breed differences and intra-breed genetic variability of dermatoglyphic pattern of cattle, J. Anim. Breed. Gen., 110, 385–392, https://doi.org/10.1111/j.1439-0388.1993.tb00751.x, 1993.

Barry, B., Gonzales-Barron, U. G., McDonnell, K., Butler, F., and Ward, S.: Using muzzle pattern recognition as a biometric approach for cattle identification, Trans. Am. Soc. Agricult. Biol. Eng. (ASABE), 50, 1073–1080, https://doi.org/10.13031/2013.23121, 2007.

Bello, R. W., Olubummo, D. A., Seiyaboh, Z., Enuma, O. C., Talib, A. Z., and Mohamed, A. S. A.: Cattle identification: the history of nose prints approach in brief, in: IOP Conference Series: Earth and Environmental Science December 2020, 594, no. 1, p. 012026, IOP Publishing, https://doi.org/10.1088/1755-1315/594/1/012026, 2020.

Bugge, C. E., Burkhardt, J., Dugstad, K. S., Enger, T. B., Kasprzycka, M., Kleinauskas, A., and Vetlesen, S.: Biometric methods of animal identification, Course Notes, Laboratory Animal Science at the Norwegian School of Veterinary Science, 1–6, 2011.

Chen, J., Wan, Z., Zhang, J., Li, W., Chen, Y., Li, Y., and Duan, Y.: Medical image segmentation and reconstruction of prostate tumor based on 3D AlexNet, Comput. Meth. Prog. Bio., 200, 105878, https://doi.org/10.1016/j.cmpb.2020.105878, 2021.

Dandıl, E., Turkan, M., Boğa, M., and Çevik, K. K.: Daha hızlı bölgesel-evrişimsel sinir ağları ile sığır yüzlerinin tanınması [Recognition of cattle faces with faster region-based convolutional neural networks], Bilecik Şeyh Edebali Üniversitesi Fen Bilimleri Dergisi, 6, 177–189, https://doi.org/10.35193/bseufbd.592099, 2019.

El Hadad, H. M., Mahmoud, H. A., and Mousa, F. A.: Bovines muzzle classification based on machine learning techniques, Proced. Comput. Sci., 65, 864–871, 2015.

El-Henawy, I., El-Bakry, H., El-Hadad, H., and Mastorakis, N.: Muzzle feature extraction based on gray level co-occurrence matrix, Int. J. Vet. Med., 1, 16–24, 2016.

Ermetin, O.: Husbandry and sustainability of water buffaloes in Turkey, Turkish Journal of Agriculture – Food Science and Technology, 5, 1673–1682, https://doi.org/10.24925/turjaf.v5i12.1673-1682.1639, 2017.

Ermetin, O.: Precision livestock farming: potential use in water buffalo (Bubalus bubalis) operations, Anim. Sci. Pap. Rep., 39, 19–30, 2021.

Ermetin, O.: Evaluation of the application opportunities of precision livestock farming (PLF) for water buffalo (Bubalus bubalis) breeding: SWOT analysis, Arch. Anim. Breed., 66, 41–50, https://doi.org/10.5194/aab-66-41-2023, 2023.

Gaber, T., Tharwat, A., Hassanien, A. E., and Snasel, V.: Biometric cattle identification approach based on Weber's Local Descriptor and AdaBoost classifier, Comput. Electron. Agr., 122, 55–66, https://doi.org/10.1016/j.compag.2015.12.022, 2016.

He, K., Zhang, X., Ren, S., and Sun, J.: Deep Residual Learning for Image Recognition, IEEE Conference on Computer Vision and Pattern Recognition, https://doi.org/10.1109/CVPR.2016.90, 2016.

Jiménez-Gamero, I., Dorado, G., Muñoz-Serrano, A., Analla, M., and Alonso-Moraga, A.: DNA microsatellites to ascertain pedigree-recorded information in a selecting nucleus of Murciano-Granadina dairy goats, Small Rumin. Res., 65, 266–273, https://doi.org/10.1016/j.smallrumres.2005.07.019, 2006.

Kühl, H. S. and Burghardt, T.: Animal biometrics: quantifying and detecting phenotypic appearance, Trends Ecol. Evol., 28, 432–441, https://doi.org/10.1016/j.tree.2013.02.013, 2013.

Kul, E., Filik, G., Şahin, A., Çayıroğlu, H., Uğurlutepe, E., and Erdem, H.: Effects of some environmental factors on birth weight of Anatolian buffalo calves, Turkish Journal of Agriculture-Food Science and Technology, 6, 444–446, https://doi.org/10.24925/turjaf.v6i4.444-446.1716, 2018.

Kumar, S., Pandey, A., Satwik, K. S. R., Kumar, S., Singh, S. K., Singh, A. K., and Mohan, A.: Deep learning framework for recognition of cattle using muzzle point image pattern, Measurement, 116, 1–17, https://doi.org/10.1016/j.measurement.2017.10.064, 2018.

Krizhevsky, A., Sutskever, I., and Hinton, G. E.: ImageNet classification with deep convolutional neural networks, Commun. ACM, 60, 84–90, https://doi.org/10.1145/3065386, 2017.

LeCun, Y., Bottou, L., Bengio, Y., and Haffner, P.: Gradient-based learning applied to document recognition, Proc. IEEE, 86, 2278–2324, https://doi.org/10.1109/5.726791, 1998.

LeCun, Y., Bengio, Y., and Hinton, G.: Deep learning, Nature, 521, 436–444, https://doi.org/10.1038/nature14539, 2015.

Lu, S., Wang, S. H., and Zhang, Y. D.: Detection of abnormal brain in MRI via improved AlexNet and ELM optimized by chaotic bat algorithm, Neur. Comput. Appl., 33, 10799–10811, https://doi.org/10.1007/s00521-020-05082-4, 2021.

Lu, Y., He, X., Wen, Y., and Wang, P. S.: A new cow identification system based on iris analysis and recognition, Int. J. Bio., 6, 18–32, 2014.

Madkour, F. A. and Abdelsabour-Khalaf, M.: Scanning electron microscopy of the nasal skin in different animal species as a method for forensic identification, Microsc. Res. Techniq., 85, 1643–1653, https://doi.org/10.1002/jemt.24024, 2022.

Minagawa, H., Fujimura, T., Ichiyanagi, M., and Tanaka, K.: Identification of beef cattle by analyzing images of their muzzle patterns lifted on paper, in: AFITA 2002: Asian agricultural information technology & management, Proceedings of the Third Asian Conference for Information Technology in Agriculture, Beijing, China, 26–28 October 2002, 596–600, China Agricultural Scientech Press, 2002.

Minu, M. S., Aroul Canessane, R., and Subashka Ramesh, S. S.: Optimal squeeze net with deep neural network-based arial image classification model in unmanned aerial vehicles, Traitement du Signal, 39, 275–281, https://doi.org/10.18280/ts.390128, 2022.

Mori, T., Kuno, Y., Yamakita, O., and Tsukada, M.: U.S. Patent No. 6,081,607, U.S. Patent and Trademark Office, Washington, DC, 2000.

Neethirajan, S. and Kemp, B.: Digital livestock farming, Sensing and Bio-Sensing Research, 32, 100408, https://doi.org/10.1016/j.sbsr.2021.100408, 2021.

Noviyanto, A. and Arymurthy, A. M.: Automatic cattle identification based on muzzle photo using speed-up robust features approach, in: Proceedings of the 3rd European conference of computer science, December 2012, ECCS 110, 114, 2012.

Noviyanto, A. and Arymurthy, A. M.: Beef cattle identification based on muzzle pattern using a matching refinement technique in the SIFT method, Comput. Electron. Agr., 99, 77–84, https://doi.org/10.1016/j.compag.2013.09.002, 2013.

Rojas-Olivares, M. A., Caja, G., Carné, S., Salama, A. A. K., Adell, N., and Puig, P.: Determining the optimal age for recording the retinal vascular pattern image of lambs, J. Anim. Sci., 90, 1040–1046, 2012.

Şahin, A., Ulutaş, Z., and Yıldırım, A.: Türkiye ve Dünya'da manda yetiştiriciliği [Buffalo breeding in Turkey and the world], Gaziosmanpaşa Bilimsel Araştırma Dergisi, 8, 65–70, 2013.

Saleem, M. H., Potgieter, J., and Arif, K. M.: Automation in agriculture by machine and deep learning techniques: A review of recent developments, Precision Agriculture, 22, 2053–2091, https://doi.org/10.1007/s11119-021-09806-x, 2021.

Shanthi, T. and Sabeenian, R. S.: Modified Alexnet architecture for classification of diabetic retinopathy images, Comput. Electr. Eng., 76, 56–64, https://doi.org/10.1016/j.compeleceng.2019.03.004, 2019.

Shojaeipour, A., Falzon, G., Kwan, P., Hadavi, N., Cowley, F. C., and Paul, D.: Automated muzzle detection and biometric identification via few-shot deep transfer learning of mixed breed cattle, Agronomy, 11, 2365, https://doi.org/10.3390/agronomy11112365, 2021.

Simonyan, K. and Zisserman, A.: Very deep convolutional networks for large-scale image recognition, arXiv, arXiv:1409.1556, 2014.

Singh, R. R., Khalid, F., Ahlawat, T. R., Sankanur, M. S., Azman, A., Agrawal, A., Ghorpade, P., and Romle, A. A.: Individual buffalo identification through muzzle dermatoglyphics images using deep learning approaches, Journal of Advanced Research in Applied Sciences and Engineering Technology, 59, 178–191, https://doi.org/10.37934/araset.59.2.178191, 2025.

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., and Rabinovich, A.: Going deeper with convolutions, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 1–9, https://doi.org/10.1109/CVPR.2015.7298594, 2015.

Van Steenkiste, G., Van Den Brulle, I., Piepers, S., and De Vliegher, S.: In-Line Detection of Clinical Mastitis by Identifying Clots in Milk Using Images and a Neural Network Approach, Animals, 13, 3783, https://doi.org/10.3390/ani13243783, 2023.

Voulodimos, A. S., Patrikakis, C. Z., Sideridis, A. B., Ntafis, V. A., and Xylouri, E. M. A.: Complete farm management system based on animal identification using RFID technology, Comput. Electron. Agr., 70, 380–388, https://doi.org/10.1016/j.compag.2009.07.009, 2010.

Wardrope, D. D.: Problems with the use of ear tags in cattle, The Veterinary Record, 137, 675, 1995.

Xu, R., Lin, H., Lu, K., Cao, L., and Liu, Y.: A forest fire detection system based on ensemble learning, Forests, 12, 217, https://doi.org/10.3390/f12020217, 2021.

Zeiler, M. D. and Fergus, R.: Visualizing and understanding convolutional networks, in: Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014, Proceedings, Part I 13, 818–833, 2014.

The study utilises artificial intelligence to improve buffalo recognition in livestock management. It employs facial images of 11 buffaloes to develop a dataset and utilises four CNN (convolutional neural network) algorithms to identify buffaloes based on muzzle patterns. Results indicate successful performance, with SqueezeNet achieving the highest accuracy of 99.88 %, along with high precision, recall, and F1 score.
